Delivering AI success through seamless access to actionable and machine-readable data

High-quality data is at the heart of successfully training and deploying AI algorithms and agents. Our blog explains how organizations can ensure that they share easily actionable, machine-readable data with AI through data products and data product marketplaces.

Artificial intelligence (AI) is transforming how organizations operate, innovate, and predict and meet changing customer and business needs. However, developing relevant, accurate and comprehensive AI models relies on relevant, accurate and comprehensive data. As has already been seen in a number of high-profile cases, training AI on poor quality or limited data risks inaccurate or even biased results, reducing trust in the technology and leading to failed projects.

Organizations looking to deploy AI internally by training models and agents therefore need to focus on collecting and sharing comprehensive, machine-readable data in a seamless, accessible way. As our blog explains, implementing data products and a data product marketplace is the key to doing this effectively and efficiently.

How data powers AI

Third-party AI models and tools, such as ChatGPT, were trained largely on publicly available data from across the internet. While this meets some general needs, such as using generative AI to write an essay or basic software code, it does not meet the specific business requirements of individual organizations. To deploy AI effectively, organizations need to tap into their own data to power AI that is tailored to their own requirements, policies and processes. That means they need to be able to generate and share machine-readable data to:

- Train their own AI models from scratch

- Fine-tune third-party AI models to meet their specific needs

- Provide data and context to AI-based applications as they operate

- Provide data and context to agent-based systems that cover whole end-to-end processes

To be used for AI, data has to meet two clear criteria. Firstly, as with data for any other application, it has to meet quality standards: it must be complete, relevant, consistent, uniform, trustworthy and accurate. Academic research from the University of Potsdam shows a clear link between incomplete, erroneous, or inappropriate training data and the creation of unreliable models that ultimately produce poor decisions.
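
To make some of these criteria concrete, the Python sketch below scores a handful of records for completeness, uniqueness, uniformity and a simple accuracy rule. It is a minimal illustration only: the field names (`customer_id`, `country`, `revenue`) and the rules (country codes in upper case, no negative revenue) are assumptions, and real quality checks would follow each organization's own standards.

```python
from typing import Any

# Hypothetical customer records; None marks a missing value.
RECORDS = [
    {"customer_id": 1, "country": "FR", "revenue": 1200.0},
    {"customer_id": 2, "country": None, "revenue": 860.5},
    {"customer_id": 2, "country": "DE", "revenue": 860.5},
    {"customer_id": 3, "country": "fr", "revenue": -50.0},
]


def quality_report(rows: list[dict[str, Any]], key: str) -> dict[str, Any]:
    """Return simple quality indicators for a non-empty list of records."""
    fields = sorted({name for row in rows for name in row})

    # Completeness: share of rows with a non-missing value, per field.
    completeness = {
        name: sum(row.get(name) is not None for row in rows) / len(rows)
        for name in fields
    }

    # Uniqueness: identifiers that appear more than once.
    seen: set[Any] = set()
    duplicates: set[Any] = set()
    for row in rows:
        if row[key] in seen:
            duplicates.add(row[key])
        seen.add(row[key])

    # Uniformity (assumed rule): country codes are written in upper case.
    non_uniform = [
        row[key] for row in rows
        if isinstance(row.get("country"), str) and not row["country"].isupper()
    ]

    # Accuracy (assumed rule): revenue is never negative.
    negative = [
        row[key] for row in rows
        if row.get("revenue") is not None and row["revenue"] < 0
    ]

    return {
        "completeness": completeness,
        "duplicate_keys": sorted(duplicates),
        "non_uniform_country": non_uniform,
        "negative_revenue": negative,
    }


report = quality_report(RECORDS, key="customer_id")
print(report["completeness"]["country"])  # 0.75
```

Each indicator flags a different failure mode; in practice, checks like these would typically run automatically whenever data is refreshed, before it reaches a model.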

Secondly, it has to be machine-readable. Data is machine-readable when it is structured in a format that a computer can process without human intervention. That means it must come with clearly defined metadata so that programs can “understand” it however they access it, whether as CSV, JSON or XML files or through APIs. A further benefit of adding tags and metadata to make data machine-readable is that the data also becomes easier for humans to understand, particularly people who were not involved in collecting or creating it.
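
As an illustration, the Python sketch below pairs a small CSV file with a JSON metadata document that states each column's type and meaning, so that a program can load and type the values without a person interpreting them. The column names, the metadata layout and the type names are hypothetical and do not follow any particular metadata standard.

```python
import csv
import io
import json
from datetime import date

# Hypothetical CSV payload, as a program might receive it from a file or an API.
CSV_TEXT = """store_id,date,units_sold
S01,2024-03-01,42
S02,2024-03-01,17
"""

# Hypothetical metadata describing every column: type, unit and meaning.
METADATA_JSON = """
{
  "title": "Daily unit sales per store",
  "fields": [
    {"name": "store_id", "type": "string", "description": "Internal store code"},
    {"name": "date", "type": "date", "description": "Sales day (ISO 8601)"},
    {"name": "units_sold", "type": "integer", "unit": "items",
     "description": "Number of items sold that day"}
  ]
}
"""

# Map the metadata's type names to Python conversion functions.
CASTERS = {"string": str, "integer": int, "date": date.fromisoformat}


def load_typed_rows(csv_text: str, metadata_json: str) -> list[dict]:
    """Parse CSV rows and convert each value using the published metadata."""
    metadata = json.loads(metadata_json)
    types = {f["name"]: f["type"] for f in metadata["fields"]}
    reader = csv.DictReader(io.StringIO(csv_text))
    # Refuse data whose columns do not match what the metadata promises.
    if set(reader.fieldnames or []) != set(types):
        raise ValueError(f"columns {reader.fieldnames} do not match metadata")
    return [
        {name: CASTERS[types[name]](value) for name, value in row.items()}
        for row in reader
    ]


rows = load_typed_rows(CSV_TEXT, METADATA_JSON)
print(rows[0])  # {'store_id': 'S01', 'date': datetime.date(2024, 3, 1), 'units_sold': 42}
```

Because the types and descriptions travel with the data, the same metadata document can be read by a program and shown to a person.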

Data and AI – a symbiotic relationship

As well as powering AI, data also benefits from AI-driven analysis. AI can quickly ingest enormous volumes of information from different sources and find patterns and signals that traditional business intelligence tools may miss, helping to unlock data’s full value. Predictive AI analytics can help forecast future events, such as upcoming demand for products. For example, AI analysis of sales data could be used to optimize sales approaches by suggesting hyper-personalized products and services to individual customers at scale.

The essential components for successfully sharing data with AI

Alongside being high quality and machine-readable, data for AI has to be accessible and trustworthy. Essentially, AI models and agents need to be able to discover and ingest relevant data quickly and easily, and be confident that it meets their requirements. Given that data is often scattered across multiple systems and silos, making it available to AI can be a time-consuming and complex process, slowing down adoption.

At the same time, organizations must ensure that data is accurate, reliable and meets their internal governance standards. It should be used in ethical, secure ways that protect privacy and avoid introducing accidental bias into AI outputs.

All of this means that organizations need to put in place three interlinked components to effectively share their data with their AI models and agents – data products, data contracts, and data product marketplaces.

Data products

A data product is a high-value data asset that has been created to meet a specific business need for a large number of users, and contains everything required to consume the underlying data. It is continually monitored and updated; it is not a one-off deliverable such as a report. Data products can be used equally by business employees without technical data skills and by AI models and agents.

Data products remove complexity from data consumption by integrating multiple data sources, interfaces and metadata into a single, accessible product. They therefore eliminate the requirement for AI or humans to interact with multiple different data sources in order to find and consume the data they require.
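
A minimal Python sketch of that idea: two hypothetical source systems (a CRM and an ERP) are merged into a single `DataProduct` object that carries its own owner, description and metadata and can be served in more than one format. The class, field names and refresh value are assumptions for illustration, not a description of how any particular platform models data products.

```python
import csv
import io
import json
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical data product: the data plus what is needed to consume it."""
    name: str
    owner: str
    description: str
    rows: list[dict]
    metadata: dict = field(default_factory=dict)

    def to_json(self) -> str:
        """Serve the product, including its metadata, as one JSON document."""
        return json.dumps({
            "name": self.name,
            "owner": self.owner,
            "description": self.description,
            "metadata": self.metadata,
            "rows": self.rows,
        })

    def to_csv(self) -> str:
        """Serve only the rows as CSV (assumes at least one row)."""
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=list(self.rows[0]))
        writer.writeheader()
        writer.writerows(self.rows)
        return buffer.getvalue()


# Two hypothetical source systems, each holding part of the picture.
CRM = [{"customer_id": 1, "name": "Acme"}, {"customer_id": 2, "name": "Globex"}]
ERP = [{"customer_id": 1, "open_orders": 3}]


def build_customer_orders(crm_rows: list[dict], erp_rows: list[dict]) -> DataProduct:
    """Join both sources on customer_id so consumers never query them directly."""
    orders = {r["customer_id"]: r["open_orders"] for r in erp_rows}
    # Assumption: a customer with no ERP record has no open orders.
    rows = [{**c, "open_orders": orders.get(c["customer_id"], 0)} for c in crm_rows]
    return DataProduct(
        name="customer-orders",
        owner="sales-ops",
        description="Open orders per customer, combined from CRM and ERP",
        rows=rows,
        metadata={"sources": ["crm", "erp"], "refresh": "daily"},
    )


product = build_customer_orders(CRM, ERP)
print(product.to_csv())
```

Consumers, whether a spreadsheet user or an AI agent, interact only with `product`, never with the CRM or ERP directly.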

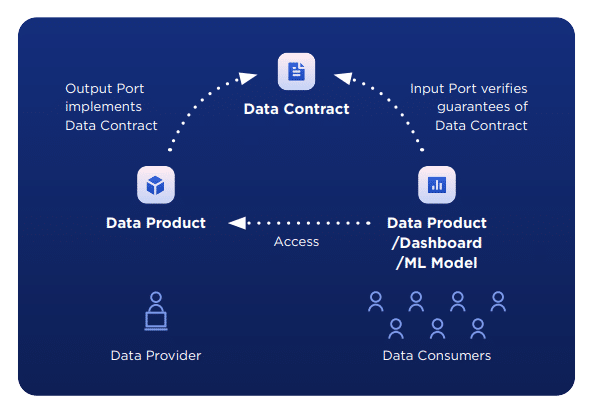

Data contracts

Data contracts are an integral part of data products. A data contract is a formal agreement that defines how data is structured, formatted and communicated between the different components of a data system. It acts as a service-level agreement (SLA) for a specific data product and its use, ensuring and enforcing data consistency, reliability and compliance.

Effectively, a data contract works like the agreement between a buyer and a seller on a consumer e-commerce marketplace. It gives end users a precise statement of what the data product owner intends to deliver and how the data product should be used, building trust between all parties. Data contracts are standardized and provided in machine-readable formats, so both AI and human users can understand and use them. AI can also be used to verify that a data contract matches the content actually delivered in the data product, further increasing data users’ confidence.
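
The Python sketch below shows what a machine-readable contract and an automated check against it might look like. The contract layout (a schema, a freshness SLA and permitted purposes) is a hypothetical example that does not follow any particular published specification, and the product name and values are assumptions. The check is plain rule-based code rather than AI, but it illustrates the kind of contract-versus-content comparison described above.

```python
import json
from datetime import datetime, timedelta, timezone

# Hypothetical data contract, published alongside the data product as JSON.
CONTRACT = json.loads("""
{
  "product": "customer-orders",
  "version": "1.0.0",
  "owner": "sales-ops",
  "schema": {"customer_id": "integer", "name": "string", "open_orders": "integer"},
  "sla": {"max_age_hours": 24},
  "usage": {"allowed_purposes": ["reporting", "model_training"]}
}
""")

PY_TYPES = {"integer": int, "string": str}


def contract_violations(contract: dict, rows: list[dict],
                        last_updated: datetime, now: datetime) -> list[str]:
    """List every way the delivered data breaks the contract (empty = compliant)."""
    problems = []
    for i, row in enumerate(rows):
        for column, type_name in contract["schema"].items():
            if column not in row:
                problems.append(f"row {i}: missing column '{column}'")
            elif not isinstance(row[column], PY_TYPES[type_name]):
                problems.append(f"row {i}: '{column}' is not {type_name}")
    # Freshness promise from the contract's SLA section.
    max_hours = contract["sla"]["max_age_hours"]
    if now - last_updated > timedelta(hours=max_hours):
        problems.append(f"data older than {max_hours} hours (SLA breached)")
    return problems


def use_permitted(contract: dict, purpose: str) -> bool:
    """Check a consumer's intended use against the contract's usage terms."""
    return purpose in contract["usage"]["allowed_purposes"]


now = datetime(2024, 3, 2, 9, 0, tzinfo=timezone.utc)
rows = [{"customer_id": 1, "name": "Acme", "open_orders": "3"}]
print(contract_violations(CONTRACT, rows, now - timedelta(hours=30), now))
print(use_permitted(CONTRACT, "model_training"))  # True
```

Here the delivered row stores `open_orders` as text and the data is 30 hours old, so both the schema and the SLA checks report a violation.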

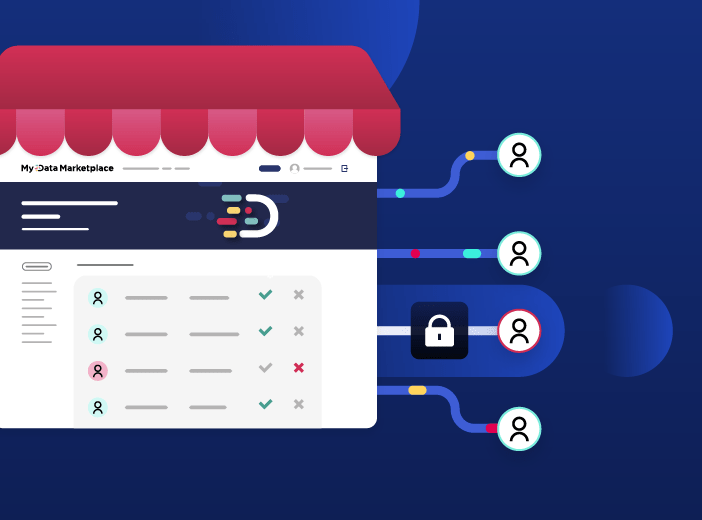

Data product marketplaces

Data product marketplaces are the optimal solution for sharing data products. These intuitive, self-service, centralized platforms are designed specifically to meet the needs of data producers, consumers and governance teams. They automate the delivery, availability and consumption of data assets, especially data products, thereby driving greater use of them.

A data product marketplace enables organizations to build and share data products, along with their associated data contracts, and make them available to everyone. Because the data is centralized and offered in easily consumable, machine-readable forms, AI models and agents can discover and ingest it seamlessly, and developers can incorporate it into AI systems. The marketplace provides a one-stop shop for data for everyone, from non-technical business users to AI, while ensuring the governance, security and compliance of data.
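
To illustrate discovery, the Python sketch below models a tiny in-memory catalog that an AI agent or a developer could query by keyword and preferred format, getting back listings that point to each product's contract. The `Listing` fields, the catalog entries and the example.com URLs are hypothetical; a real marketplace would expose its catalog through its own search interface and API, which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Listing:
    """Hypothetical marketplace entry for one data product."""
    product_id: str
    title: str
    description: str
    keywords: tuple[str, ...]
    formats: tuple[str, ...]
    contract_url: str  # where the machine-readable data contract is published


CATALOG = [
    Listing("customer-orders", "Customer orders",
            "Open orders per customer, combined from CRM and ERP",
            ("sales", "customers"), ("json", "csv"),
            "https://marketplace.example.com/contracts/customer-orders.json"),
    Listing("store-sales", "Daily store sales",
            "Units sold per store and day",
            ("sales", "retail"), ("csv",),
            "https://marketplace.example.com/contracts/store-sales.json"),
]


def search(catalog: list[Listing], query: str,
           fmt: Optional[str] = None) -> list[Listing]:
    """Rank listings by how many query terms they mention, optionally by format."""
    terms = query.lower().split()
    scored = []
    for item in catalog:
        text = " ".join((item.title, item.description, *item.keywords)).lower()
        score = sum(term in text for term in terms)
        if score and (fmt is None or fmt in item.formats):
            scored.append((score, item))
    # Highest score first; ties broken by product_id for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1].product_id))
    return [item for _, item in scored]


for hit in search(CATALOG, "customer orders", fmt="json"):
    print(hit.product_id, hit.contract_url)
```

Simple keyword matching keeps the sketch short; a production catalog would more likely rely on a proper search index and richer metadata.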

Essentially, by building a marketplace that is designed for humans, for example with Opendatasoft’s technology, organizations also make it relevant for AI: the same clear metadata, data contracts and standard formats that help people find and use data also let AI models and agents consume it. The result? Greater data consumption across the business to deliver increased efficiency, productivity, innovation, collaboration and the creation of new services and revenue streams.

Increasing AI use through data product marketplaces

As businesses implement AI across their organization, they need to ensure that they have easy access to high-quality, machine-readable data to drive success. The combination of data products, data contracts and data product marketplaces underpins AI adoption by delivering data in well-governed, understandable and consumable forms. This enables businesses to empower both AI and humans, harnessing the benefits of data to deliver on their key objectives.

Want to learn more about Opendatasoft’s data product marketplace and our unique, API-driven approach? Contact us.
